- type: Post
- status: Published
- summary: Can't be summarized
- slug: reship-text-20240810
- date: Aug 10, 2024
- tags: Methods
- category: Reposted good reads
- Property: Apr 18, 2025 02:12 PM

Reposted from: Crony Beliefs by Kevin Simler

Credits up front: This essay draws heavily from Overcoming Bias, Less Wrong, Slate Star Codex, Robert Kurzban, Robert Trivers, Thomas Schelling, and Jonathan Haidt (among others I'm certainly forgetting). These are some of my all-time favorite sources, so I hope I'm doing them justice here.

—

For as long as I can remember, I've struggled to make sense of the terrifying gulf that separates the inside and outside views of beliefs.

From the inside, via introspection, each of us feels that our beliefs are pretty damn sensible. Sure we might harbor a bit of doubt here and there. But for the most part, we imagine we have a firm grip on reality; we don't lie awake at night fearing that we're massively deluded.

But when we consider the beliefs of other people? It's an epistemic shit show out there. Astrology, conspiracies, the healing power of crystals. Aliens who abduct Earthlings and build pyramids. That vaccines cause autism or that Obama is a crypto-Muslim, or that the world was formed some 6,000 years ago, replete with fossils made to look millions of years old. How could anyone believe this stuff?!

No, seriously: how?

Let's resist the temptation to dismiss such believers as "crazy" — along with "stupid," "gullible," "brainwashed," and "needing the comfort of simple answers." Surely these labels are appropriate some of the time, but once we apply them, we stop thinking.

This isn't just lazy; it's foolish. These are fellow human beings we're talking about, creatures of our same species whose brains have been built (grown?) according to the same basic pattern. So whatever processes beget their delusions are at work in our minds as well. We therefore owe it to ourselves to try to reconcile the inside and outside views. Because let's not flatter ourselves: we believe crazy things too. We just have a hard time seeing them as crazy.

So, once again: how could anyone believe this stuff? More to the point: how could we end up believing it?

After struggling with this question for years and years, I finally have an answer I'm satisfied with.

BELIEFS AS EMPLOYEES

By way of analogy, let's consider how beliefs in the brain are like employees at a company. This isn't a perfect analogy, but it'll get us 70% of the way there.[1]

Employees are hired because they have a job to do, i.e., to help the company accomplish its goals. But employees don't come for free: they have to earn their keep by being useful.

So if an employee does his job well, he'll be kept around, whereas if he does it poorly — or makes other kinds of trouble, like friction with his coworkers — he'll have to be let go.

Similarly, we can think about beliefs as ideas that have been "hired" by the brain.

And we hire them because they have a "job" to do, which is to provide accurate information about the world.[2] We need to know where the lions hang out (so we can avoid them), which plants are edible or poisonous (so we can eat the right ones), and who's romantically available (so we know whom to flirt with).

The closer our beliefs hew to reality, the better actions we'll be able to take, leading ultimately to survival and reproductive success. That's our "bottom line," and that's what determines whether our beliefs are serving us well. If a belief performs poorly — by inaccurately modeling the world, say, and thereby leading us astray — then it needs to be let go.

I hope none of this is controversial. But here's where the analogy gets interesting.

Consider the case of Acme Corp., a property development firm in a small town called Nepotsville. The unwritten rule of doing business in Nepotsville is that companies are expected to hire the city council's friends and family members.

Companies that make these strategic hires end up getting their permits approved and winning contracts from the city. Meanwhile, companies that "refuse to play ball" find themselves getting sued, smeared in the local papers, and shut out of new business.

In this environment, Acme faces two kinds of incentives, one pragmatic and one political. First, like any business, it needs to complete projects on time and under budget. And in order to do that, it needs to act like a meritocracy, i.e., by hiring qualified workers, monitoring their performance, and firing those who don't pull their weight. But at the same time, Acme also needs to appease the city council. And thus it needs to engage in a little cronyism, i.e., by hiring workers who happen to be well-connected to the city council (even if they're unqualified) and preventing those crony workers from being fired (even when they do shoddy work).

Suppose Acme has just decided to hire the mayor's nephew Robert as a business analyst.[3] Robert isn't even remotely qualified for the role, but it's nevertheless in Acme's interests to hire him.

He'll "earn his keep" not by doing good work, but by keeping the mayor off the company's back.

Now suppose we were to check in on Robert six months later. If we didn't already know he was a crony, we might easily mistake him for a regular employee. We'd find him making spreadsheets, attending meetings, drawing a salary: all the things employees do.

But if we look carefully enough — not at Robert per se, but at the way the company treats him — we're liable to notice something fishy. He's terrible at his job, and yet he isn't fired. Everyone cuts him slack and treats him with kid gloves. The boss tolerates his mistakes and even works overtime to compensate for them.

God knows, maybe he's even promoted.

Clearly Robert is a different kind of employee, a different breed. The way he moves through the company is strange, as if he's governed by different rules, measured by a different yardstick. He's in the meritocracy, but not of the meritocracy.

And now the point of this whole analogy.

I contend that the best way to understand all the crazy beliefs out there — aliens, conspiracies, and all the rest — is to analyze them as crony beliefs. Beliefs that have been "hired" not for the legitimate purpose of accurately modeling the world, but rather for social and political kickbacks.

As Steven Pinker puts it: "People are embraced or condemned according to their beliefs, so one function of the mind may be to hold beliefs that bring the belief-holder the greatest number of allies, protectors, or disciples, rather than beliefs that are most likely to be true."

In other words, just like Acme, the human brain has to strike an awkward balance between two different reward systems:

- Meritocracy, where we monitor beliefs for accuracy out of fear that we'll stumble by acting on a false belief; and

- Cronyism, where we don't care about accuracy so much as whether our beliefs make the right impressions on others.

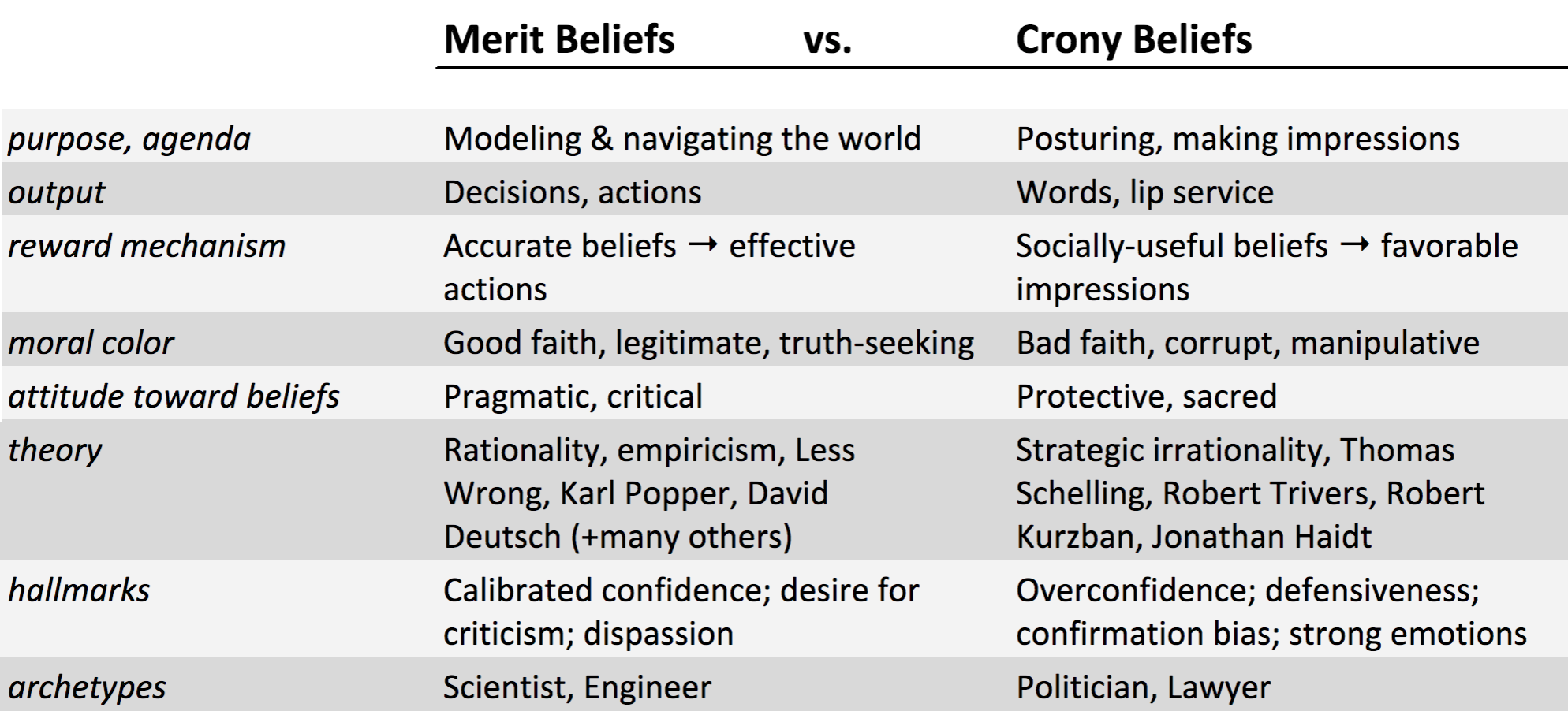

And so we can roughly (with caveats we'll discuss in a moment) divide our beliefs into merit beliefs and crony beliefs. Both contribute to our bottom line — survival and reproduction — but they do so in different ways: merit beliefs by helping us navigate the world, crony beliefs by helping us look good.

The point is, our brains are incredibly powerful organs, but their native architecture doesn't care about high-minded ideals like Truth. They're designed to work tirelessly and efficiently — if sometimes subtly and counterintuitively — in our self-interest.

So if a brain anticipates that it will be rewarded for adopting a particular belief, it's perfectly happy to do so, and doesn't much care where the reward comes from — whether it's pragmatic (better outcomes resulting from better decisions), social (better treatment from one's peers), or some mix of the two.

A brain that didn't adopt a socially-useful (crony) belief would quickly find itself at a disadvantage relative to brains that are more willing to "play ball." In extreme environments, like the French Revolution, a brain that rejects crony beliefs, however spurious, may even find itself forcibly removed from its body and left to rot on a pike.

Faced with such incentives, is it any wonder our brains fall in line?

Even mild incentives, however, can still exert pressure on our beliefs. Russ Roberts tells the story of a colleague who, at a picnic, started arguing for an unpopular political opinion — that minimum wage laws can cause harm — whereupon there was a "frost in the air" as his fellow picnickers "edged away from him on the blanket." If this happens once or twice, it's easy enough to shrug off.

But when it happens again and again, especially among people whose opinions we care about, sooner or later we'll second-guess our beliefs and be tempted to revise them.

Mild or otherwise, these incentives are also pervasive. Everywhere we turn, we face pressure to adopt crony beliefs. At work, we're rewarded for believing good things about the company. At church, we earn trust in exchange for faith, while facing severe sanctions for heresy.

In politics, our allies support us when we toe the party line, and withdraw support when we refuse. (When we say politics is the mind-killer, it's because these social rewards completely dominate the pragmatic rewards, and thus we have almost no incentive to get at the truth.) Even dating can put untoward pressure on our minds, insofar as potential romantic partners judge us for what we believe.

If you've ever wanted to believe something, ask yourself where that desire comes from. Hint: it's not the desire simply to believe what's true.

In short: Just as money can pervert scientific research, so everyday social incentives have the potential to distort our beliefs.

POSTURING

So far we've been describing our brains as "responding to incentives," which gives them a passive role. But it can also be helpful to take a different perspective, one in which our brains actively adopt crony beliefs in order to strategically influence other people. In other words, we use crony beliefs to posture.

Here are a few of the agendas we can accomplish with our beliefs:

- Blending in. Often it's useful to avoid drawing attention to ourselves; as Voltaire said, "It is dangerous to be right in matters on which the established authorities are wrong." In which case, we'll want to adopt ordinary or common beliefs.

- Sticking out. In other situations, it might be better to bristle and hold unorthodox beliefs, in order to demonstrate that we're independent thinkers or that we aren't cowed by authority. This is similar to the biological strategy of aposematism, and I suspect it's one of the key motives driving people to conspiracy theories and other contrarian beliefs.

- Sucking up. Being a yes-man or -woman, or otherwise adopting beliefs that flatter those with power, is an established tactic for cozying up to authority figures. Similarly (though we don't think of it as "sucking up"), we often use beliefs to demonstrate loyalty, both to individuals ("My son would never do something like that") and to entire communities ("The Raiders are definitely going to win tonight").[4] Just as we bow, kneel, and prostrate ourselves before rulers and altars, we also have means of humbling ourselves epistemically, e.g., by adopting beliefs that privilege others' interests over our own.

- Showing off, AKA signaling. We can use our beliefs to show off many of our cognitive or psychological qualities: intelligence, kindness, openness, cleverness, etc. I'm sure Elon Musk impressed a lot of people with his willingness to entertain the mind-bending idea that our universe is merely a simulation; whether he's right or wrong is largely incidental.[5]

- Cheerleading. Here the idea is to believe what you want other people to believe: in other words, believing your own propaganda, drinking your own Kool-Aid. Over-the-top self-confidence, for example, seems dangerous as a private merit belief, but makes perfect sense as a crony belief, if expressing it inspires others to have confidence in you.

- Jockeying for high ground. This might mean the moral high ground ("Effective Altruism is the only kind of altruism worth doing") or some kind of social high ground ("New York has so much more culture than San Francisco").

It's also important to remember that we have many different audiences to posture and perform in front of. These include friends, family, neighbors, classmates, coworkers, people at church, other parents at our kids' preschool, etc.

And a belief that helps us with one audience might hurt us with another.

Behind every crony belief, then, lies a rat's nest of complexity. In practice, this means we can never know (with any certainty) what caused a given belief to be adopted, at least not from the outside.

There are simply too many different incentives — too many possible postures in front of too many audiences — to try to calculate how a given belief might be in someone's best interest.

Only the brain of the believer is in a position to weigh all the tradeoffs involved — and even then it might make a mistake. When discussing specific beliefs, then, we must proceed with extreme caution and humility.

In fact, it's probably best to stick to stereotypes and generalizations.

WHAT'S THE POINT?

Now, in some sense, all of this is so obvious as to hardly be worth stating. Of course our brains respond to social incentives; everyone knows this from first-hand experience. (Don't we?) In another sense, however, I worry that the social influences on our beliefs are sorely underappreciated.

I, for one, typically explain my own misbeliefs (as well as those I see in others) as rationality errors, breakdowns of the meritocracy. But what I'm arguing here is that most of these misbeliefs are features, not bugs.

What looks like a market failure is actually crony capitalism. What looks like irrationality is actually streamlined epistemic corruption.

In fact, I'll go further. I contend that social incentives are the root of all our biggest thinking errors.

(For what it's worth, I don't have a ton of confidence in this assertion. I just think it's a reasonable hypothesis and worth putting forward, if for no other purpose than to draw out good counterarguments or counterexamples.)

Let me elaborate. Suppose we weren't Homo sapiens but Solo sapiens, a hypothetical species as intelligent as we are today, but with no social life whatsoever (perhaps descended from orangutans?). In that case, it's my claim that our minds would be clean, efficient information-processing machines — straightforward meritocracies trying their best to make sense of the world.

Sure we would continue to make mistakes here and there, owing to all the usual factors: imperfect information, limited time, limited brainpower. But those mistakes would be small, random, and correctable.

Unfortunately, as Homo sapiens, our mistakes are stubborn, systematic, and (in some cases) exaggerated by runaway social feedback loops. And this, I claim, is because our lives are teeming with other people. The trouble with people is that they have partial visibility into our minds, and they sometimes reward us for believing falsehoods and/or punish us for believing the truth. This is why we're tempted to participate in epistemic corruption — to think in bad faith.

RESPONSIBLE USAGE

Before we go any further, a brief warning.

One of my main goals for writing this essay has been to introduce two new concepts — merit beliefs and crony beliefs — that I hope make it easier to talk and reason about epistemic problems. But unless we're careful, these concepts can do more harm than good.

First, it's important to remember that merit beliefs aren't necessarily true, nor are crony beliefs necessarily false. What distinguishes the two concepts is how we're rewarded for them: via effective actions or via social impressions.

The best we can say is that merit beliefs are more likely to be true.

The other important subtlety is that a given belief can serve both pragmatic and social purposes at the same time — just like Robert could theoretically be a productive employee, even while he's the mayor's nephew. Suppose I were to believe that "My team is capable and competent," for example.

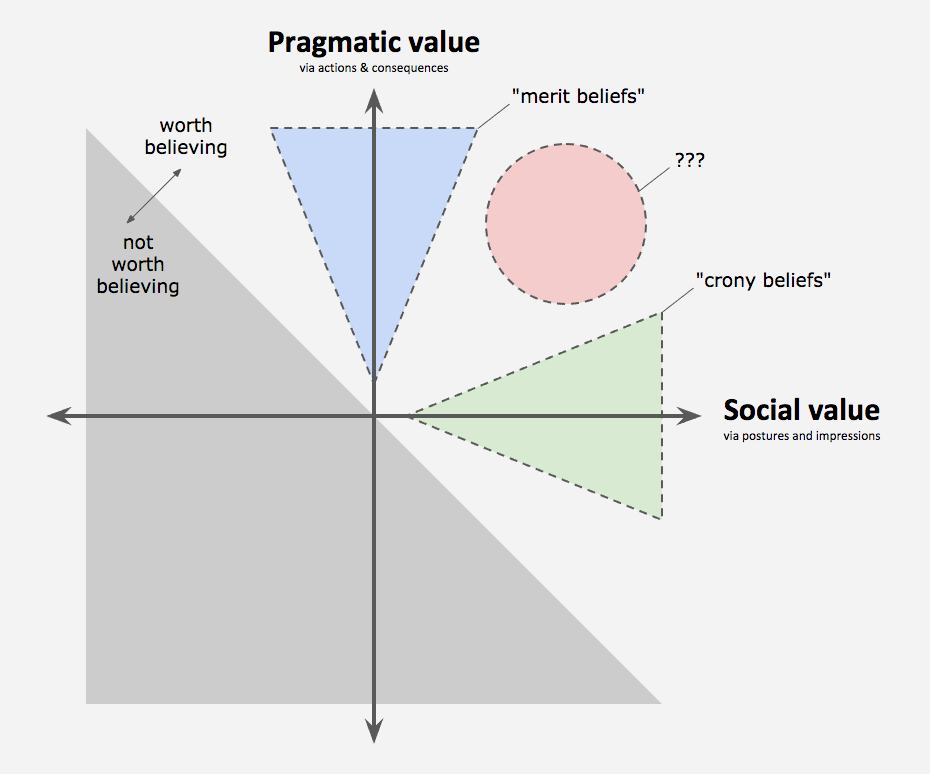

This has pragmatic value, insofar as it contributes to my decision to stay on the team (vs. looking for a new one), but it also has social value, insofar as my team is more likely to trust me when I express this belief. So is it a "merit belief" or a "crony belief"? Mu. When labels break down, it's wise to taboo them in order to get back in touch with the underlying reality — which in our case, looks something like this:

When we call something a "merit belief," then, we're claiming that its pragmatic value far outstrips its social value. And if we call something a "crony belief," we're claiming the reverse.

But it's important to remember that the underlying belief-space is two-dimensional, and that a given belief can fall at any point within that space.

With that in mind, I think it's safe to proceed.

IDENTIFYING CRONY BELIEFS

Now for the $64,000 question: How can we identify which of our beliefs are cronies?

What makes this task difficult is that crony beliefs are designed to mimic ordinary merit beliefs. That said, something in our brains has to be aware (dimly, at least) of which beliefs are cronies, or else we wouldn't be able to give them the coddling that they need to survive inside an otherwise meritocratic system. (If literally no one at Acme knew that Robert was a crony employee, he'd quickly be fired.)

The trick, then, is to look for differences in how merit beliefs and crony beliefs are treated by the brain.

From first principles, we should expect ordinary beliefs to be treated with level-headed pragmatism. They have only one job to do — model the world — and when they do it poorly, we suffer. This naturally leads to such attitudes as a fear of being wrong and even an eagerness to be criticized and corrected. As Karl Popper and (more recently) David Deutsch have argued, knowledge can't exist without criticism.

If we want to be right in the long run, we have to accept that we'll often be wrong in the short run, and be willing to do the needful thing, i.e., discard questionable beliefs. This may sound vaguely heroic or psychologically difficult, somehow, but it's not.

A meritocracy experiences no anguish in letting go of a misbelief and adopting a better one, even its opposite. In fact, it's a pleasure. If I believe that my daughter's soccer game starts at 6pm, but my neighbor informs me that it's 5pm, I won't begrudge his correction — I'll be downright grateful.

Crony beliefs, on the other hand, get an entirely different treatment. Since we mostly don't care whether they're making accurate predictions, we have little need to seek out criticism for them. (Why would Acme bother monitoring Robert's performance if they never intend to fire him?)

Going further, crony beliefs actually need to be protected from criticism. It's not that they're necessarily false, just that they're more likely to be false; either way, they're unlikely to withstand serious criticism. Thus we should expect our brains to take an overall protective or defensive stance toward our crony beliefs.

Here, then, is a short list of features that crony beliefs will tend to have, relative to good-faith merit beliefs:

- Abstract and impractical. Merit beliefs have value only insofar as we're able to make use of them for choosing actions; we need some "skin in the game." If a belief isn't actionable, or if the actions we might take based on the belief (e.g., voting) don't provide material benefits one way or the other, then it's more likely to be a crony.

- Benefit of the doubt. When we have social incentives to believe something, we stack the deck in its favor. Or to use another metaphor, we put our thumbs on the scale as we weigh the evidence. Blind faith, whether religious, political, or otherwise, is simply "benefit of the doubt" taken to its logical extreme.

- Conspicuousness. The whole point of a crony belief is to reap social and political rewards, but in order to get these rewards, we need to advertise the belief in question. So the greater our urge to talk about a belief, to wear it like a badge, the more likely it is to be a crony.

- Overconfidence. Related to the above, crony beliefs will typically provide more social value the more confident we seem in them. (If Acme hires the mayor's nephew, but seems constantly on the verge of firing him, the mayor isn't going to be happy.) Overconfidence also acts as a form of protection for beliefs that can't survive on their own within the meritocracy.

- Reluctance to bet. Betting on a belief is just as good as acting on it; both mechanisms create incentives for accuracy. If we're reluctant to bet on a belief, then, it's often because some parts of our psyche know that the belief is unlikely to be true. Hence the challenge: "Put up or shut up."

But perhaps the biggest hallmark of epistemic cronyism is exhibiting strong emotions, as when we feel proud of a belief, anguish over changing our minds, or anger at being challenged or criticized.

These emotions have no business being within 1000ft of a meritocratic belief system — but of course they make perfect sense as part of a crony belief system, where cronies need special protection in order to survive the natural pressures of a meritocracy.

J'ACCUSE

Now for the uncomfortable part: wherein I accuse you, dear reader, of cronyism.

Specifically, I charge you with harboring a crony belief about climate change. I don't care whether you subscribe to the scientific consensus or whether you think it's all a hoax — or even whether you hold some nuanced position.

Unless you're radically uncertain, your belief is a crony.

"But," I imagine you might object, "my belief is based on evidence and careful reasoning. I've read in-depth on climate science and confronted every criticism of my position. Hell, I've even changed my own mind on this topic! I used to believe X, but now I believe Y."

Here's the problem: I'm not accusing your belief of being false; I'm accusing it of being a crony. And no appeal to evidence or careful reasoning, or even felt sincerity, can rebut this accusation.

What makes for a crony belief is how we're rewarded for it. And the problem with beliefs about climate change is that we have no way to act on them — by which I mean there are no actions we can take whose payoffs (for us as individuals) depend on whether our beliefs are true or false.

The rare exception would be someone living near the Florida coast, say, who moves inland to avoid predicted floods. (Does such a person even exist?) Or maybe the owner of a hedge fund or insurance company who places bets on the future evolution of the climate.

But for the rest of us, our incentives come entirely from other people, from the way they judge us for what we believe and say. And thus our beliefs about climate change are little more than hot air (pun intended).

Of course there's nothing special about climate change. We find similar bad-faith incentives among many different types of beliefs:

- Political beliefs, like whether gun control will save lives or which candidate will lead us to greater prosperity.

- Religious beliefs, like whether God approves of birth control or whether Islam is a "religion of peace."

- Ethical beliefs, like whether animals should have legal personhood or how to answer the various trolley problems.

- Beliefs about the self, like "I'm a good person," or "I have free will."

- Beliefs about identity groups, like whether men and women have statistically different aptitudes or whether certain races are mistreated by police.

Since it's all but impossible to act on these beliefs, there are no legitimate sources of reward. Meanwhile, we take plenty of social kickbacks for these beliefs, in the form of the (hopefully favorable) judgments others make when we profess them.

In other words, they're all cronies.

So then: is it hopeless? Is "believing things" a lost cause in anything but the most quotidian of domains?

Certainly I've had my moments being wracked by such despair. But perhaps there's a sliver of hope.

BETTER INCENTIVES

Conventional wisdom holds that the way to more accurate beliefs is "critical thinking." Lock yourself in a room with the Less Wrong sequences (or the equivalent), study the principles of rationality and empiricism, learn about cognitive biases, etc. — and soon you'll be well on your way to a mind reasonably free of falsehoods.

The problem with this approach is that it addresses the symptom (irrationality) without addressing the root cause (social incentives).

Let's return to Acme for a moment. Imagine that some clueless (but well-meaning) executive notices that Robert and a handful of other workers aren't getting fired, despite their shoddy work. Not realizing that they're cronies, the exec naturally suspects the problem is not enough meritocracy. So he launches a campaign to beef up performance standards, replete with checklists, quarterly reviews, oversight committees, management training seminars, etc., etc., etc. — all of which is more likely to hurt the company than to help it.

The two most likely effects are (1) the cronies get fired, and the city council comes down hard on Acme, or (2) the other execs, who are clued in to the cronyism, have to work even harder to protect the cronies from getting fired. In other words, Acme suffers more "corporate dissonance."

Now if our executive crusader understood the full picture, he might instead direct his efforts outside the company, at the political ecosystem that allows strong-arming and corruption to fester. If he could fix Nepotsville city politics, he'd be quashing the problem at its root, and Acme's meritocracy could then begin to heal naturally.

Back in the belief domain, it's similarly clueless (if well-meaning) to focus on beefing up the "meritocracy" within an individual mind. If you give someone the tools to purge their crony beliefs without fixing the ecosystem in which they're embedded, it's a prescription for trouble. They'll either (1) let go of their crony beliefs (and lose out socially), or (2) suffer more cognitive dissonance in an effort to protect the cronies from their now-sharper critical faculties.

The better — but much more difficult — solution is to attack epistemic cronyism at the root, i.e., in the way others judge us for our beliefs.

If we could arrange for our peers to judge us solely for the accuracy of our beliefs, then we'd have no incentive to believe anything but the truth.

In other words, we do need to teach rationality and critical thinking skills — not just to ourselves, but to everyone at once.

The trick is to see this as a multilateral rather than a unilateral solution.[6] If we raise epistemic standards within an entire population, then we'll all be cajoled into thinking more clearly (making better arguments, weighing evidence more evenhandedly, etc.) lest we be seen as stupid, careless, or biased.

The beauty of Less Wrong, then, is that it's not just a textbook: it's a community. A group of people who have agreed, either tacitly or explicitly, to judge each other for the accuracy of their beliefs — or at least for behaving in ways that correlate with accuracy. And so it's the norms of the community that incentivize us to think and communicate as rationally as we do.

All of which brings us to a strange and (at least to my mind) unsettling conclusion. Earlier I argued that other people are the cause of all our epistemic problems. Now I find myself arguing that they're also our best solution.

_____

Appendix

Yes, I've sunk to writing an appendix to a blog post. It was published separately from this essay; read it especially if you're interested in these two questions: (1) What actual evidence do we have that our brains are engaging in cronyism? and (2) Why don't we simply lie (instead of internalizing crony beliefs)?

_____

Thanks to Mills Baker and Robin Hanson for their comments on previous drafts of this essay.

Further reading:

- Robin Hanson, Are Beliefs Like Clothes? Probably the original statement of the dual function of beliefs.

- Sarah Perry, The Art of the Conspiracy Theory. Key quote: "The conspiracy theory is an active, creative art form, whose truth claims serve as formal obstructions rather than being the primary point of the endeavor."

Endnotes:

[1] beliefs. Pedantic note for philosopher-types: When I talk about "beliefs" in this essay, I'm referring to representations that make up our brain's internal map or model of the world. Beliefs aren't always true, of course, but what's important is that we treat them as though they were true. Beliefs also come with degrees of certainty: confident, hesitant, or anywhere in between. Sometimes we represent beliefs explicitly in words, e.g., "Bill Clinton was the 42nd president of the United States." Most of our beliefs, however, are entirely implicit.

When we sit down at a desk, for example, we don't have to tell ourselves, "There's a chair behind me"; we simply fall backwards and expect the chair to catch us.

The point is, I'm using "beliefs" as an umbrella term to cover many related concepts: ideas, knowledge, convictions, opinions, predictions, and expectations; in this essay, they're all getting lumped together.

[2] accurate information. To be maximally precise, we don't need our beliefs to be accurate so much as we need them to be expedient. If a belief is accurate but too complex to act on, it's a liability. That's why we adopt heuristic beliefs: quick and dirty approximations that are accurate enough to produce good outcomes.

Expedience may also explain some of our perceptual biases, like when we hear a strange noise in the woods and jump to thinking it's a person or animal. Statistically, strange sounds are more likely to be caused by wind or a falling tree branch. But when the outcomes (of false positives vs. false negatives) are so skewed, it can behoove us to believe a likely falsehood.

[3] Robert. I've taken the liberty of naming our crony after the three Roberts famous for their writings on self-deception: Trivers, Kurzban, and Wright.

[4] showing loyalty. Note from the Etymology Fairy: the English words truth, faith, and veracity all derive from roots meaning "to trust." As in, "You trust me, don't you?"

[5] Elon Musk. Actually he may have lost respect, on net, for making the simulation argument. Either way, I'm pretty sure he (or his brain) thought it would impress people.

[6] unilateral rationality. There is an argument for teaching rationality to individuals in isolation, which is that our minds may be so thoroughly corrupted by social incentives that they can't reason effectively even in the absence of those incentives — in which case, rationality may fix the corruption, but at the cost of some serious social side effects.